Gram-Schmidt Procedure

Implementing the Gram-Schmidt procedure in Python

What is the Gram-Schmidt procedure?

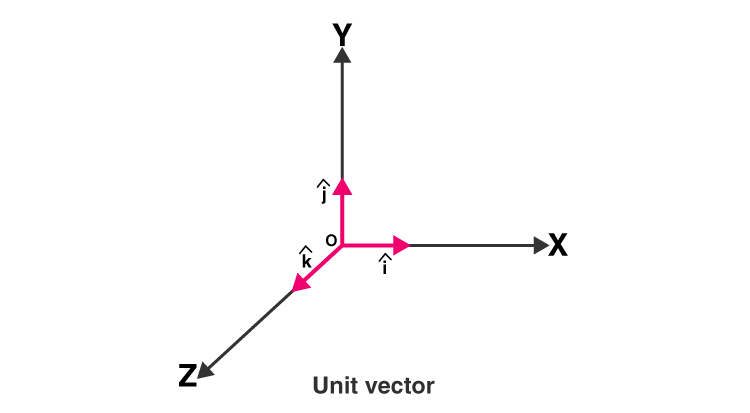

Today I learned that the Gram-Schmidt procedure takes a list of vectors and builds an orthonormal basis from it: every new basis vector is orthogonal (at 90 degrees) to all the others and has length 1.
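To make the idea concrete, here is a minimal sketch of the procedure applied by hand to two vectors in the plane. The vectors v1 and v2 are made-up example values, not taken from the lab.

```python
import numpy as np

# Two hypothetical, linearly independent vectors in the plane.
v1 = np.array([3.0, 4.0])
v2 = np.array([1.0, 0.0])

# Step 1: normalise the first vector.
e1 = v1 / np.linalg.norm(v1)

# Step 2: remove from v2 its component along e1, then normalise what is left.
u2 = v2 - (v2 @ e1) * e1
e2 = u2 / np.linalg.norm(u2)

print(e1 @ e2)             # approximately 0: the two vectors are orthogonal
print(np.linalg.norm(e2))  # approximately 1: unit length
```

The same two steps (subtract the overlap with every earlier vector, then normalise) are what the full code repeats for each column.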

How are matrices represented in Python?

If A is a matrix (a NumPy array), we can picture its elements laid out as:

A[0, 0] A[0, 1] A[0, 2] A[0, 3]

A[1, 0] A[1, 1] A[1, 2] A[1, 3]

A[2, 0] A[2, 1] A[2, 2] A[2, 3]

A[3, 0] A[3, 1] A[3, 2] A[3, 3]

Here r is the row index and c is the column index. We can access the value of a single element by using

A[r, c]

We can also access a whole row at a time using

A[r]

or a whole column at a time by using

A[:, c]

Indices always start counting at 0. We can also take the dot product of two vectors with the @ operator.
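The indexing rules and the @ operator can be tried out on a small array. This is only an illustrative sketch: the matrix below is a made-up example whose entries encode their own position, so the results are easy to check by eye.

```python
import numpy as np

# A hypothetical 4x4 matrix with A[r, c] == 10 * r + c,
# so the element at row 2, column 3 is 23.
A = np.array([[10 * r + c for c in range(4)] for r in range(4)])

element = A[2, 3]  # single element at row 2, column 3
row = A[1]         # whole row 1 (same as A[1, :])
column = A[:, 2]   # whole column 2

# The @ operator on two 1-D arrays gives their dot product.
dot = A[0] @ A[:, 0]

print(element)  # 23
print(row)      # [10 11 12 13]
print(column)   # [ 2 12 22 32]
print(dot)      # 140
```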

Python code for the Gram-Schmidt procedure

The code first carries out the procedure step by step for a 4x4 matrix (gsBasis4), then generalises it to a matrix with any number of columns by using a for-loop (gsBasis).


# GRADED FUNCTION
import numpy as np
import numpy.linalg as la

verySmallNumber = 1e-14  # That's 1×10⁻¹⁴ = 0.00000000000001

# Our first function will perform the Gram-Schmidt procedure for 4 basis vectors.
# We'll take this list of vectors as the columns of a matrix, A.
# We'll then go through the vectors one at a time and make each one orthogonal
# to all the vectors that came before it, before normalising it.
# Follow the instructions inside the function at each comment.
# You will be told where to add code to complete the function.
def gsBasis4(A):
    # Make B a floating-point copy of A, since we're going to alter its values.
    # (np.float_ was removed in NumPy 2.0; the built-in float gives the same dtype.)
    B = np.array(A, dtype=float)
    # The zeroth column is easy, since it has no other vectors to make it normal to.
    # All that needs to be done is to normalise it, i.e. divide by its modulus, or norm.
    B[:, 0] = B[:, 0] / la.norm(B[:, 0])
    # For the first column, we need to subtract any overlap with our new zeroth vector.
    B[:, 1] = B[:, 1] - (B[:, 1] @ B[:, 0]) * B[:, 0]
    # If there's anything left after that subtraction, then B[:, 1] is linearly independent of B[:, 0].
    # If this is the case, we can normalise it. Otherwise we'll set that vector to zero.
    if la.norm(B[:, 1]) > verySmallNumber:
        B[:, 1] = B[:, 1] / la.norm(B[:, 1])
    else:
        B[:, 1] = np.zeros_like(B[:, 1])
    # Now we need to repeat the process for column 2.
    # Insert two lines of code, the first to subtract the overlap with the zeroth vector,
    # and the second to subtract the overlap with the first.
    B[:, 2] = B[:, 2] - (B[:, 2] @ B[:, 0]) * B[:, 0]
    B[:, 2] = B[:, 2] - (B[:, 2] @ B[:, 1]) * B[:, 1]
    # Again we'll need to normalise our new vector.
    # Copy and adapt the normalisation fragment from above to column 2.
    if la.norm(B[:, 2]) > verySmallNumber:
        B[:, 2] = B[:, 2] / la.norm(B[:, 2])
    else:
        B[:, 2] = np.zeros_like(B[:, 2])
    # Finally, column three:
    # Insert code to subtract the overlap with the first three vectors.
    B[:, 3] = B[:, 3] - (B[:, 3] @ B[:, 0]) * B[:, 0]
    B[:, 3] = B[:, 3] - (B[:, 3] @ B[:, 1]) * B[:, 1]
    B[:, 3] = B[:, 3] - (B[:, 3] @ B[:, 2]) * B[:, 2]
    # Now normalise if possible.
    if la.norm(B[:, 3]) > verySmallNumber:
        B[:, 3] = B[:, 3] / la.norm(B[:, 3])
    else:
        B[:, 3] = np.zeros_like(B[:, 3])
    # Finally, we return the result:
    return B

# The second part of this exercise will generalise the procedure.
# Previously, we could only have four vectors, and there was a lot of repetition in the code.
# We'll use a for-loop here to iterate the process for each vector.
def gsBasis(A):
    # Make B a floating-point copy of A, since we're going to alter its values.
    # (np.float_ was removed in NumPy 2.0; the built-in float gives the same dtype.)
    B = np.array(A, dtype=float)
    # Loop over all vectors, starting with zero, and label them with i.
    for i in range(B.shape[1]):
        # Inside that loop, loop over all previous vectors, j, to subtract.
        for j in range(i):
            # Complete the code to subtract the overlap with previous vectors.
            # You'll need the current vector B[:, i] and a previous vector B[:, j].
            B[:, i] = B[:, i] - (B[:, i] @ B[:, j]) * B[:, j]
        # Next insert code to do the normalisation test for B[:, i].
        if la.norm(B[:, i]) > verySmallNumber:
            B[:, i] = B[:, i] / la.norm(B[:, i])
        else:
            B[:, i] = np.zeros_like(B[:, i])
    # Finally, we return the result:
    return B

# This function uses the Gram-Schmidt process to calculate the dimension
# of the space spanned by a list of vectors.
# Since each resulting vector is either normalised to one or set to zero,
# the sum of all the norms will be the dimension.
def dimensions(A):
    return np.sum(la.norm(gsBasis(A), axis=0))
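As a quick sanity check, the sketch below runs the loop-based procedure on two made-up matrices. To keep the snippet self-contained it restates the loop under the hypothetical name gs_basis_sketch (with a hypothetical tol parameter) instead of importing the lab's functions. The matrices V and C are my own example values.

```python
import numpy as np
import numpy.linalg as la

def gs_basis_sketch(A, tol=1e-14):
    """Compact restatement of the loop-based procedure (hypothetical name)."""
    B = np.array(A, dtype=float)
    for i in range(B.shape[1]):
        # Subtract the overlap with every previously processed column.
        for j in range(i):
            B[:, i] = B[:, i] - (B[:, i] @ B[:, j]) * B[:, j]
        # Normalise if anything is left, otherwise zero the column out.
        norm = la.norm(B[:, i])
        if norm > tol:
            B[:, i] = B[:, i] / norm
        else:
            B[:, i] = np.zeros_like(B[:, i])
    return B

# Case 1: three linearly independent columns.
V = np.array([[1, 0, 2],
              [0, 1, 0],
              [1, 1, 1]])
B = gs_basis_sketch(V)
# For an orthonormal set of columns, B.T @ B is the identity matrix.
print(np.allclose(B.T @ B, np.eye(3)))  # True

# Case 2: the third column is the sum of the first two.
C = np.array([[1, 1, 2],
              [0, 1, 1],
              [0, 0, 0]])
D = gs_basis_sketch(C)
# The dependent column collapses to zero, so the norms sum to 2.
print(np.sum(la.norm(D, axis=0)))  # 2.0
```

The second case shows why summing the column norms gives the dimension: each independent vector contributes exactly 1 and each dependent vector contributes 0.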

- Written December 17, 2023

Derived from a coding lab in a Coursera class titled “Mathematics for Machine Learning: Linear Algebra” from Imperial College London.

This post is licensed under CC BY 4.0 by the author.